3D-aware Recursive Diffusion

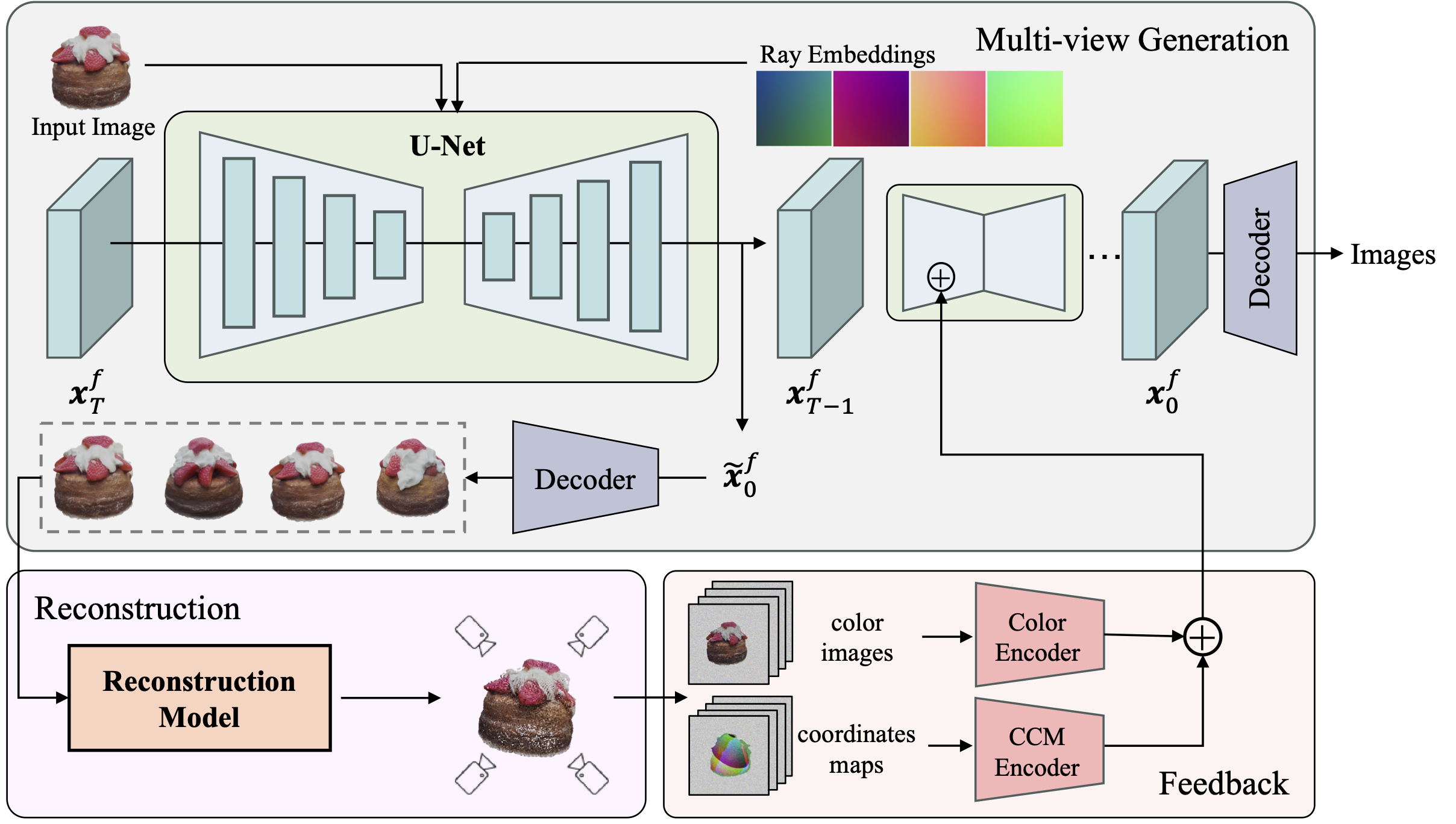

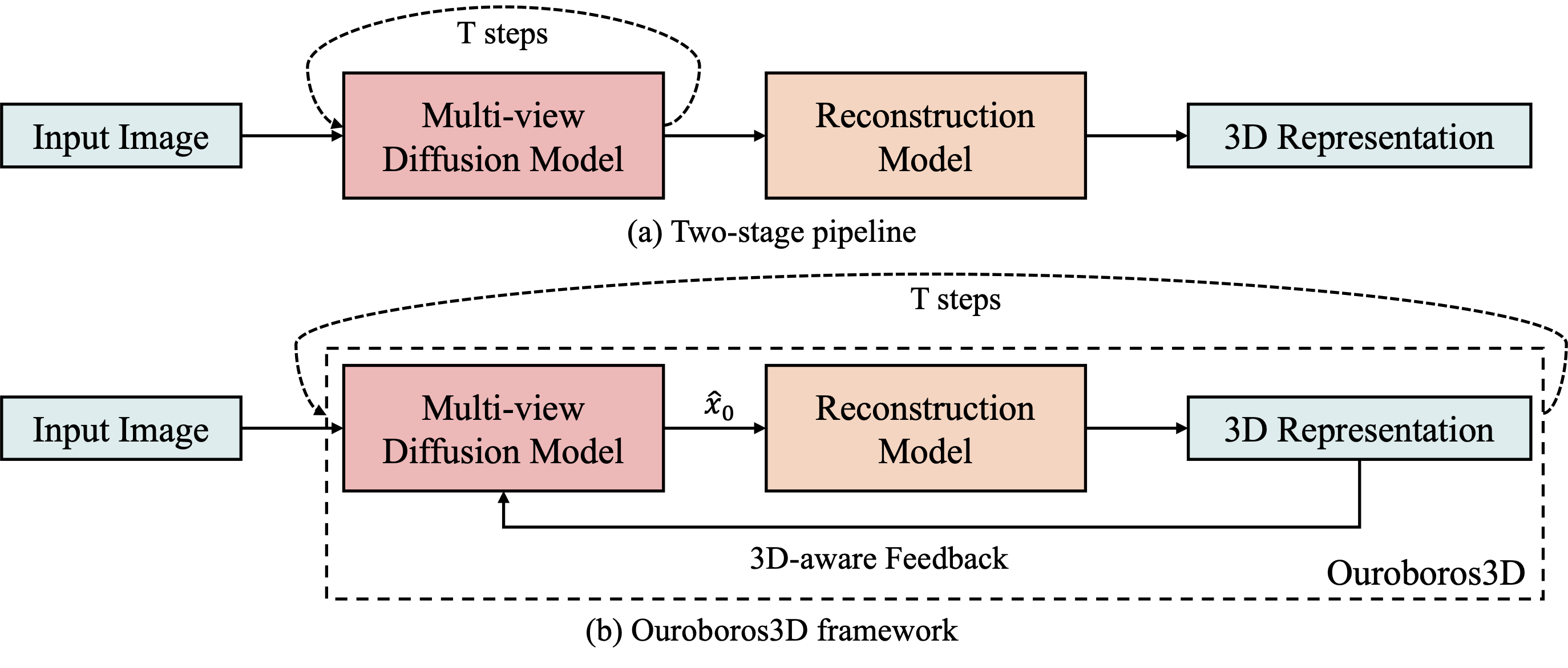

Concept comparison between Ouroboros3D and previous two-stage methods. Instead of directly combining a multi-view diffusion model with a reconstruction model, our self-conditioned framework trains the two models jointly and establishes a recursive association between them. At each step of the denoising process, the rendered 3D-aware maps are fed to the multi-view generation model at the next step.

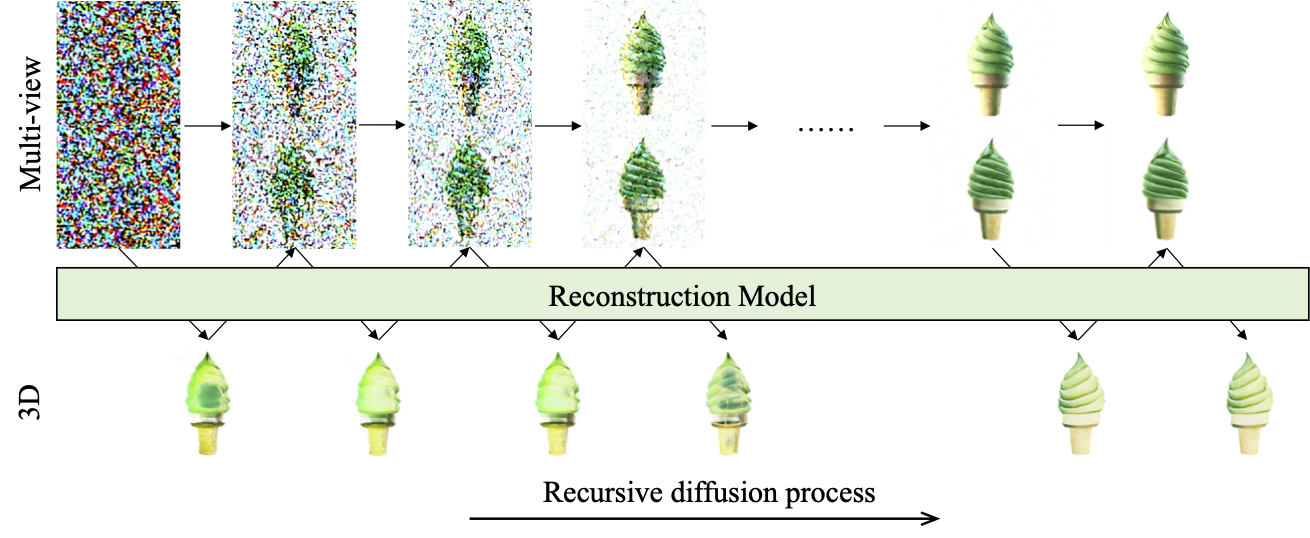

Concept of 3D-aware recursive diffusion. During multi-view denoising, the diffusion model uses 3D-aware maps rendered by the reconstruction module at the previous step as conditions.
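The recursive conditioning described in this caption can be sketched as a sampling loop. The following is a minimal illustration only, not the authors' implementation: `toy_denoiser`, `toy_reconstruct_and_render`, and `recursive_sample` are hypothetical names, the views are plain lists of floats, and the update rule is a toy stand-in for a real diffusion sampler. The only thing it is meant to show is the data flow, in which the maps rendered at one step become the condition for the next step.

```python
from typing import Callable, List, Optional, Tuple

Views = List[List[float]]  # toy stand-in: one flat list of floats per view


def toy_denoiser(noisy: Views, t: int, cond: Optional[Views]) -> Views:
    """Hypothetical stand-in for the multi-view diffusion model."""
    pred = [[0.9 * p for p in view] for view in noisy]
    if cond is not None:
        # Blend in the 3D-aware maps rendered at the previous step.
        pred = [
            [0.5 * p + 0.5 * c for p, c in zip(view, cond_view)]
            for view, cond_view in zip(pred, cond)
        ]
    return pred


def toy_reconstruct_and_render(pred: Views) -> Views:
    """Hypothetical stand-in for the reconstruction module plus rendering.

    Averaging across views mimics the effect of a shared 3D representation:
    every rendered map is consistent with every other one.
    """
    n = len(pred)
    mean = [sum(column) / n for column in zip(*pred)]
    return [list(mean) for _ in pred]


def recursive_sample(
    init: Views,
    num_steps: int,
    denoiser: Callable[[Views, int, Optional[Views]], Views],
    render: Callable[[Views], Views],
) -> Tuple[Views, Optional[Views]]:
    """Run the recursive loop: denoise, reconstruct and render, then condition."""
    x = init
    cond: Optional[Views] = None  # no 3D-aware maps exist before the first step
    for t in range(num_steps, 0, -1):
        pred = denoiser(x, t, cond)  # conditioned on maps from the previous step
        cond = render(pred)          # maps to be used at the next step
        x = pred                     # toy update; a real sampler would re-noise
    return x, cond


views, maps = recursive_sample(
    [[1.0, 2.0], [3.0, 4.0]], 3, toy_denoiser, toy_reconstruct_and_render
)
```

In this sketch the first step runs without a condition because no maps have been rendered yet; how the actual framework handles the first step is not stated in the caption and is an assumption here.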